Natural Language Processing (NLP) is a subfield of artificial intelligence that focuses on the interaction between computers and humans through natural language. It involves the development of algorithms and techniques that enable computers to understand, interpret, and generate human language. NLP has become increasingly important in recent years due to the explosion of textual data on the internet, social media, and other digital sources.

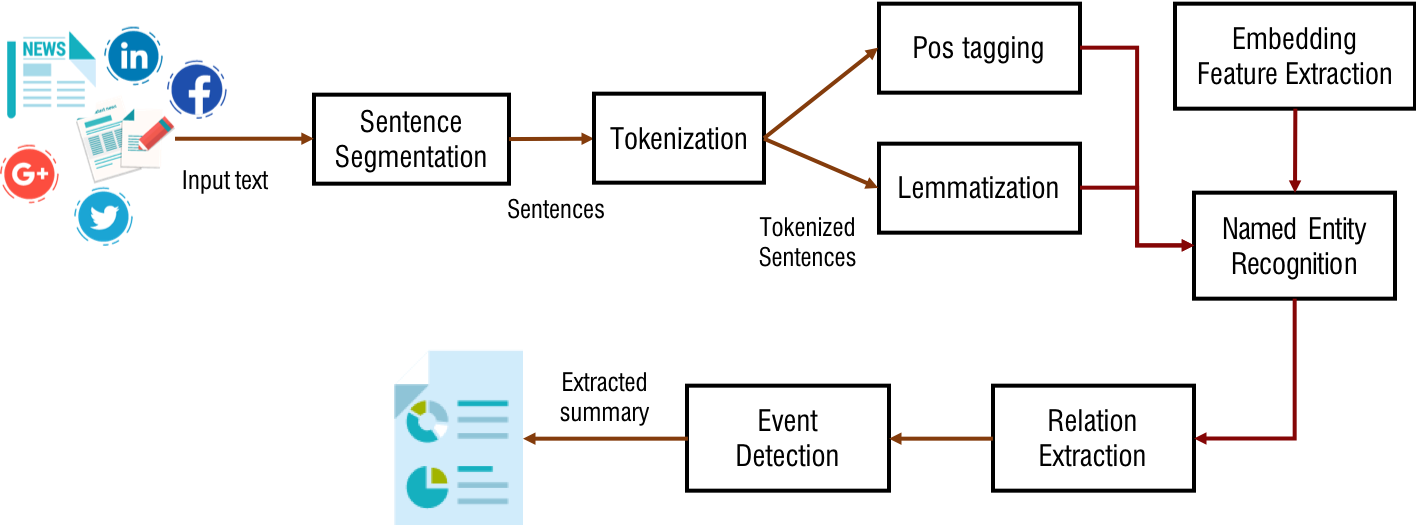

NLP architecture refers to the design and structure of NLP systems, which are composed of various components that work together to perform specific tasks. An NLP system typically draws on some of the following components, which range from low-level processing steps to complete end-user tasks:

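- Text Preprocessing: This component involves cleaning and normalizing raw text data. Typical steps include removing unwanted characters, converting text to lowercase, and removing stop words (common words such as "the" and "and" that carry little meaning on their own). Which steps are appropriate depends on the task: lowercasing, for example, discards capitalization that named entity recognition often relies on, and some stop-word lists include negations such as "not", whose removal can reverse the apparent sentiment of a sentence.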

- Tokenization: This component involves breaking text into individual tokens, which are usually words and punctuation marks but can also be smaller subword units. This is a critical step in NLP, because most later components operate on tokens rather than on raw characters.

- Part-of-Speech (POS) Tagging: This component involves labeling each token with its corresponding part of speech (noun, verb, adjective, etc.). POS tagging is important for many NLP tasks, such as named entity recognition and sentiment analysis.

- Named Entity Recognition (NER): This component involves identifying and categorizing named entities (such as people, places, and organizations) in the text.

- Sentiment Analysis: This component involves analyzing the sentiment or emotion expressed in the text. This can be useful for applications such as customer feedback analysis and social media monitoring.

- Language Modeling: This component involves building a statistical model of language that assigns probabilities to sequences of words; for example, it can estimate how likely each candidate word is to come next, given the words before it.

- Machine Translation: This component involves translating text from one language to another. Machine translation is a complex task that involves many of the other components in the NLP architecture.

- Text Generation: This component involves generating new text based on input data. This can be useful for applications such as chatbots and automated content creation.
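The first two components can be sketched in a few lines of Python. This is a minimal illustration rather than a production tokenizer: it assumes a regular-expression tokenizer and a tiny, hypothetical stop-word list, and the names `STOP_WORDS`, `preprocess`, `tokenize`, and `remove_stop_words` are illustrative only. Stop-word removal runs after tokenization here, since it operates on individual tokens.

```python
import re

# Hypothetical, deliberately tiny stop-word list; real lists are much longer.
STOP_WORDS = {"the", "and", "a", "an", "of", "to", "is", "in"}


def preprocess(text: str) -> str:
    """Lowercase the text and replace unwanted characters with spaces."""
    text = text.lower()
    # Keep ASCII letters, digits, apostrophes and whitespace; everything else becomes a space.
    text = re.sub(r"[^a-z0-9'\s]", " ", text)
    # Collapse runs of whitespace into single spaces.
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text: str) -> list[str]:
    """Split normalized text into word tokens."""
    return re.findall(r"[a-z0-9']+", text)


def remove_stop_words(tokens: list[str]) -> list[str]:
    """Drop tokens that appear in STOP_WORDS."""
    return [tok for tok in tokens if tok not in STOP_WORDS]


raw = "The cat sat on the mat -- and the DOG barked!"
tokens = remove_stop_words(tokenize(preprocess(raw)))
print(tokens)  # → ['cat', 'sat', 'on', 'mat', 'dog', 'barked']
```

The character filter keeps only ASCII letters and digits, so accented or non-Latin text would be mangled; a real system would typically use a Unicode-aware tokenizer and a curated stop-word list chosen for the task.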

Overall, the architecture of an NLP system is highly dependent on the specific task it is designed to perform. However, most NLP systems will include some or all of the above components.
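Because the set of components depends on the task, one common way to organize a system is as an ordered sequence of stages, each of which reads and updates a shared document representation. The sketch below assumes a hypothetical `Pipeline` class, a `Doc` record, and toy stages; in particular, `toy_tag` is a dictionary lookup standing in for a trained POS tagger, not a real tagging algorithm.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Doc:
    """Shared representation that every stage reads and updates."""
    text: str
    tokens: list[str] = field(default_factory=list)
    tags: list[str] = field(default_factory=list)


# A stage is any function that takes a Doc and returns a (possibly updated) Doc.
Stage = Callable[[Doc], Doc]


def lowercase(doc: Doc) -> Doc:
    doc.text = doc.text.lower()
    return doc


def whitespace_tokenize(doc: Doc) -> Doc:
    doc.tokens = doc.text.split()
    return doc


# Hypothetical lookup table standing in for a trained POS tagger.
TOY_LEXICON = {"dogs": "NOUN", "bark": "VERB", "loudly": "ADV"}


def toy_tag(doc: Doc) -> Doc:
    # Unknown words get the placeholder tag "X".
    doc.tags = [TOY_LEXICON.get(tok, "X") for tok in doc.tokens]
    return doc


class Pipeline:
    """Runs a fixed sequence of stages over a new Doc built from raw text."""

    def __init__(self, stages: list[Stage]) -> None:
        self.stages = stages

    def __call__(self, text: str) -> Doc:
        doc = Doc(text)
        for stage in self.stages:
            doc = stage(doc)
        return doc


# Two task-specific configurations assembled from the same components.
tokenizer_only = Pipeline([lowercase, whitespace_tokenize])
tagger = Pipeline([lowercase, whitespace_tokenize, toy_tag])

result = tagger("Dogs bark loudly")
print(list(zip(result.tokens, result.tags)))
# → [('dogs', 'NOUN'), ('bark', 'VERB'), ('loudly', 'ADV')]
```

Keeping every stage behind the same `Doc -> Doc` signature is what lets different tasks reuse, reorder, or omit components without changing the others.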

In addition to the core components mentioned above, NLP systems may also include other components such as:

- Dependency Parsing: This component involves analyzing the syntactic structure of a sentence by identifying the grammatical relationships between words, such as which noun is the subject of a verb.

- Coreference Resolution: This component involves identifying expressions that refer to the same entity, for example linking a pronoun such as "she" to the person it refers to earlier in the text.

- Topic Modeling: This component involves discovering the topics present in a text corpus and describing each document in terms of those topics.

- Information Extraction: This component involves extracting structured data from unstructured text, such as names, dates, and other important information.

- Question Answering: This component involves answering questions posed in natural language by extracting relevant information from a text corpus.
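Information extraction turns free text into structured records. As a rough, rule-based sketch, Python's `re` and `datetime` modules are enough to pull dates out of a sentence and validate them. The `ISO_DATE` pattern and the `extract_dates` helper are hypothetical and cover only ISO-style dates such as `2023-04-15`; extracting names or free-form dates ("March 15th") would need considerably more machinery.

```python
import re
from datetime import date

# Illustrative pattern: only ISO-8601 calendar dates such as 2023-04-15.
ISO_DATE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")


def extract_dates(text: str) -> list[date]:
    """Return every valid ISO date in the text, in order of appearance."""
    found = []
    for match in ISO_DATE.finditer(text):
        year, month, day = (int(g) for g in match.groups())
        try:
            found.append(date(year, month, day))
        except ValueError:
            # Skip strings shaped like dates that are not real calendar dates.
            continue
    return found


text = "Signed on 2021-03-15; the draft said 2024-02-30, but it expires 2024-12-31."
print(extract_dates(text))
# → [datetime.date(2021, 3, 15), datetime.date(2024, 12, 31)]
```

Validating each match with `date(...)` separates "looks like a date" from "is a date", which is why `2024-02-30` is dropped from the structured output.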

The architecture of an NLP system may also vary depending on the type of data it is processing. For example, speech recognition systems may require additional components such as audio signal processing and speaker identification.

There are several approaches to building NLP systems, including rule-based, statistical, and machine learning approaches. Rule-based approaches involve manually defining rules for processing text, while statistical approaches involve building models from statistical analysis of large text corpora. Machine learning approaches involve training models on data, often labeled examples, and using them to make predictions on new data.
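The statistical approach, and the language modeling component described earlier, can be illustrated together with a bigram language model whose probabilities are maximum-likelihood estimates from word-pair counts in a corpus. This is a hedged sketch: the three-sentence corpus, the `<s>`/`</s>` sentence-boundary markers, and the `BigramModel` name are assumptions for illustration, and practical models add smoothing so that unseen word pairs do not receive zero probability.

```python
from collections import Counter, defaultdict


class BigramModel:
    """MLE bigram model: P(w_i | w_{i-1}) = count(w_{i-1}, w_i) / count(w_{i-1}, *)."""

    def __init__(self, sentences: list[list[str]]) -> None:
        self.bigram_counts: dict[str, Counter] = defaultdict(Counter)
        for tokens in sentences:
            # Boundary markers let the model score sentence starts and ends.
            padded = ["<s>"] + tokens + ["</s>"]
            for prev, curr in zip(padded, padded[1:]):
                self.bigram_counts[prev][curr] += 1

    def prob(self, prev: str, curr: str) -> float:
        """Conditional probability of `curr` after `prev` (0.0 if `prev` was never seen)."""
        following = self.bigram_counts.get(prev)
        if not following:
            return 0.0
        return following[curr] / sum(following.values())

    def sentence_prob(self, tokens: list[str]) -> float:
        """Probability of a whole sentence as a product of bigram probabilities."""
        padded = ["<s>"] + tokens + ["</s>"]
        p = 1.0
        for prev, curr in zip(padded, padded[1:]):
            p *= self.prob(prev, curr)
        return p


corpus = [
    ["the", "cat", "sat"],
    ["the", "dog", "sat"],
    ["the", "cat", "ran"],
]
model = BigramModel(corpus)
print(model.prob("the", "cat"))                    # 2 of the 3 words after "the" are "cat": 2/3
print(model.sentence_prob(["the", "cat", "sat"]))  # 1 * 2/3 * 1/2 * 1 = 1/3
```

Nothing here is hand-written grammar: every probability comes from corpus counts, which is the defining difference from a rule-based system.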

In recent years, deep learning approaches, which use multi-layer neural networks, have become increasingly popular in NLP because they can learn complex patterns in text data automatically rather than relying on hand-engineered features. These models have achieved state-of-the-art performance on many NLP tasks, including language modeling, sentiment analysis, and machine translation.

In summary, the architecture of an NLP system is a combination of components that work together to process and analyze natural language data. The specific components included will depend on the task at hand and the type of data being processed. Advances in deep learning and other machine learning techniques have enabled NLP systems to reach substantially higher accuracy than earlier approaches on a wide range of tasks.